Brussels, Belgium, January: Leading AI researchers and cybersecurity specialists warned that AI cybersecurity threats are accelerating faster than regulatory and technical defenses, placing governments, businesses, and public infrastructure under increasing strain. The warnings come as artificial intelligence becomes deeply embedded across financial systems, healthcare networks, transportation, and national security platforms throughout Europe and beyond.

Experts gathering in Brussels described the current moment as a turning point at which artificial intelligence is no longer a future risk but an active force reshaping the global cyber threat environment.

Artificial Intelligence Becomes a Core Security Concern

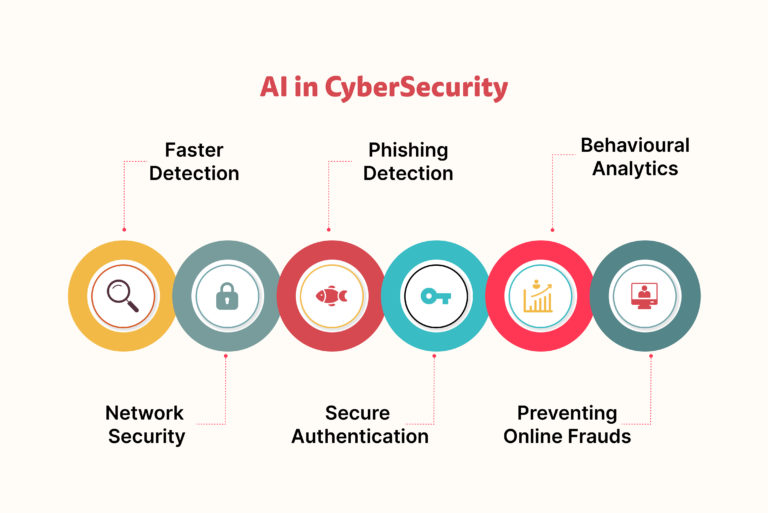

Artificial intelligence has moved rapidly from experimental technology to operational backbone. Automated decision systems now control data flows, optimize logistics, and manage critical digital processes at scale. While the efficiency gains are significant, this expansion has introduced new attack vectors that traditional cybersecurity frameworks were not designed to handle.

Researchers emphasize that AI cybersecurity threats arise not only from external attackers but also from design flaws, data vulnerabilities, and insufficient oversight during deployment. As systems grow more autonomous, small weaknesses can cascade into large-scale failures.

Why Brussels Has Emerged as a Focal Point

Brussels has become a central hub for discussions on digital governance, hosting European institutions and international policy forums. The city’s role in shaping technology regulation has drawn global attention to how artificial intelligence risks are addressed at a policy level.

In 2026, Brussels-based researchers and regulators are increasingly focused on AI cybersecurity threats as a shared challenge that crosses borders, industries, and political systems. Officials argue that fragmented national approaches are insufficient for technology that operates globally.

How Cybercriminals Are Adapting to AI Systems

Cybercriminal networks are evolving alongside artificial intelligence. Automated tools now allow attackers to scan for vulnerabilities, adapt malware in real time, and scale operations with minimal human intervention.

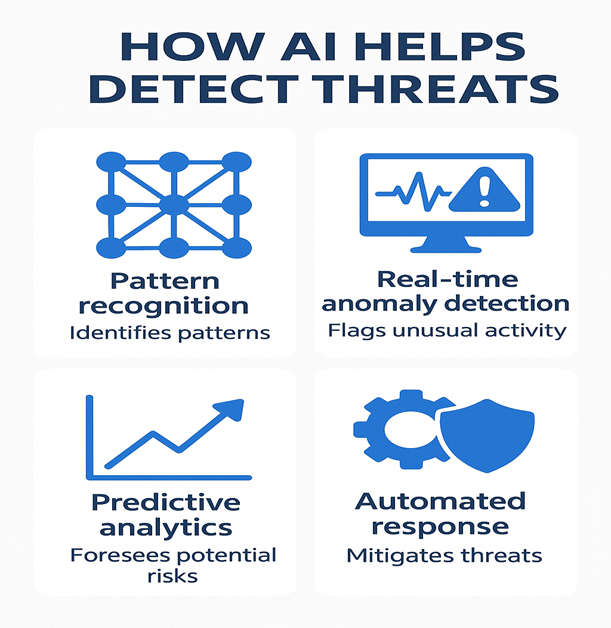

Security analysts warn that AI-powered attack tools enable faster reconnaissance and more targeted attacks. By analyzing vast datasets, malicious actors can identify weak points in networks that would take human analysts months to uncover manually.

Threats to Financial and Economic Stability

Financial institutions increasingly rely on AI-driven systems for fraud detection, trading, and risk management. While these systems improve efficiency, they also present attractive targets for cyberattacks.

Researchers caution that AI cybersecurity threats targeting financial infrastructure could trigger cascading disruptions, affecting markets, consumer trust, and economic stability. Even brief system outages can result in significant financial losses.

Risks Facing Healthcare and Public Services

Healthcare systems have embraced artificial intelligence to support diagnostics, patient management, and resource allocation. These systems often process highly sensitive personal data.

Experts highlight that AI cybersecurity threats in healthcare could lead to data breaches, service interruptions, or manipulated outputs that compromise patient safety. Public services, including emergency response and social systems, face similar vulnerabilities.

Critical Infrastructure Under Growing Pressure

Energy grids, transportation networks, and water systems are increasingly managed through AI-assisted platforms. These systems are designed for efficiency but can become single points of failure if compromised.

According to researchers, AI cybersecurity threats targeting critical infrastructure pose some of the most serious risks, as digital attacks could translate into physical consequences affecting millions of people.

The Role of Open Development Ecosystems

Open development has accelerated artificial intelligence innovation by allowing researchers to share models, datasets, and methodologies. However, openness also reduces barriers for malicious use.

Security experts argue that AI cybersecurity threats are amplified when powerful tools are released without sufficient safeguards. Once publicly available, systems can be modified and redeployed beyond the control of their original developers.

Gaps in Regulation and Oversight

Despite growing awareness, regulatory frameworks have struggled to keep pace with AI development. Existing cybersecurity laws often fail to address the unique characteristics of autonomous and learning systems.

In Brussels, policymakers acknowledge that AI cybersecurity threats require new approaches combining technical standards, legal accountability, and cross-sector cooperation. Efforts are underway to align national regulations with broader European strategies.

Industry Efforts to Strengthen AI Security

Technology companies are investing in defensive measures such as red-team testing, adversarial simulations, and continuous monitoring of AI behavior. Some firms have established dedicated AI security units to address emerging risks.

Despite progress, industry leaders concede that AI cybersecurity threats evolve faster than defensive capabilities. Collaboration between competitors, once rare, is becoming more common as shared risks intensify.

Ethical Dimensions of AI Security

Beyond technical concerns, artificial intelligence raises ethical questions related to surveillance, privacy, and decision-making authority. Misuse of AI systems can erode public trust even in the absence of direct cyberattacks.

Researchers stress that addressing AI cybersecurity threats also means ensuring transparency and accountability in how systems are designed and deployed. Ethical governance is increasingly viewed as a security requirement rather than a separate consideration.

International Cooperation and Intelligence Sharing

Cyber threats transcend national boundaries, making international coordination essential. European institutions are working with global partners to improve information sharing and joint response mechanisms.

Experts argue that AI cybersecurity threats demand cooperative frameworks that allow rapid exchange of threat intelligence, best practices, and technical expertise across borders.

Preparing the Workforce for AI Security Challenges

A shortage of skilled cybersecurity professionals remains a significant obstacle. AI systems require specialized knowledge to secure, monitor, and audit effectively.

Educational institutions and governments are expanding training programs to address AI cybersecurity threats, aiming to build a workforce capable of responding to increasingly complex risks.

The Economic Cost of Inaction

Failure to address AI-related risks could carry substantial economic costs. Disruptions to digital services, intellectual property theft, and loss of consumer confidence all have long-term implications.

Analysts warn that ignoring AI cybersecurity threats could undermine the very productivity gains artificial intelligence promises to deliver.

Lessons From Past Technology Transitions

Previous technological shifts, from the rise of the internet to mobile computing, revealed security gaps only after widespread adoption. Artificial intelligence is following a similar pattern, but at a much faster pace.

Researchers believe that learning from past mistakes is critical to managing AI cybersecurity threats before they become systemic.

A Single Voice From the Research Community

One senior cybersecurity researcher told conference attendees,

“Artificial intelligence is redefining digital risk, and security must be designed into these systems from the very beginning, not added as an afterthought.”

The comment captured the urgency shared across academic and policy circles.

Building Resilience Into AI Systems

Resilience, rather than absolute security, is emerging as a guiding principle. Systems must be able to detect, withstand, and recover from attacks without catastrophic failure.

Experts argue that reducing AI cybersecurity threats requires layered defenses, continuous testing, and realistic threat modeling that reflects real-world conditions.

Public Trust and Democratic Stability

Public confidence in digital systems is closely linked to democratic stability. If citizens believe technology is unsafe or unaccountable, resistance to innovation grows.

Addressing AI cybersecurity threats transparently can help maintain trust and ensure that artificial intelligence supports, rather than undermines, social cohesion.

A Defining Year for AI Risk Governance

As discussions intensify in Brussels, 2026 is shaping up to be a defining year for how artificial intelligence risks are governed. Decisions made now will influence technology policy for decades.

The focus on AI cybersecurity threats reflects a broader recognition that innovation and security must advance together in an increasingly connected world.

Beyond Technology Toward Collective Responsibility

Ultimately, artificial intelligence security is not solely a technical issue. It involves institutions, laws, cultures, and shared values.

By confronting AI cybersecurity threats through cooperation and foresight, policymakers and industry leaders hope to guide artificial intelligence toward outcomes that strengthen, rather than endanger, global digital society.